The Ever-Changing World of the DIT and Digital Cinematography

As the 10th anniversary of the Red One cinema camera approaches, I thought it would be a good time for an overview of the changing roles of the DIT and the cinematographer in 2016.

Beyond surveying recent changes in technology and practice, the purpose is to raise awareness of how the DIT is used today and to help cinematography progress.

The role of the DIT (Digital Imaging Technician) is one of the most ill-defined in cinematography, which is as surprising as it is unsurprising. It is surprising because the role has existed in its present form for roughly ten years, essentially since the introduction of the Red One in 2006. It is unsurprising because technology and budgets have changed at such an alarming rate that duties and expectations have shifted dramatically. Really, the role has been in flux since its earliest days and remains so, which makes it very hard to define.

Starting Out

My first introduction to the Red camera was in very early 2007. It had not been officially released, but a lot had been written online about its potential. At the time I was working as a 2nd AC and Video Assist Operator on 35mm commercials and feature films. It was very early in my career and, being very enthusiastic, I would scour the internet for information on every camera format. I was surprised that few of the people I worked with had heard of this new camera, and those who had dismissed it as 'vapourware'. In 2007 film was still the gold standard, though things were changing.

In 2007 I worked as a daily 2nd AC on a shoot that used the Viper FilmStream. The Viper was a pioneering digital camera, but most people dismissed it as an unworkable novelty because it was tethered to a very large array of hard drives, which was very restrictive. The less restrictive Red, however, gradually began to be used on productions throughout America, and word soon spread over here, mostly in hushed tones about an industry-changing camera or a silly toy, depending on your stance. As an aside, Marc Dando owned that Viper and would soon go on to dominate the industry as the creator of Codex.

By chance, in early 2008, two productions came along in quick succession that would change my career path. The first was a short film directed by a friend. He had received a small amount of funding (such things actually existed at the time) and managed to persuade the very talented Robbie Ryan to DP. He also suggested that Robbie shoot on the Red, which was obviously very exciting to me on both counts. I was hired as the 2nd AC (though I ended up as 1st partway through). Unlike on other shoots I'd been on, a technician came with the camera. This seemed strange to me but, as it transpired, was completely necessary. Although we had spent a lot of time studying Red manuals and tutorials, in the heat of a shoot it was very easy to forget where menus were (Red was never good at menus), and we had no real idea what to do with the Red Drives once they had been shot out. Our technician would copy these drives onto computer drives overnight (this seemed very scary and technical). With everything so new, I spent every spare moment memorising the menus, asking questions about downloading the data and reading about every new firmware update (which Red released to make the camera work properly). I believe we were on firmware 13 at the time.

The second production happened the following week. A commercials production company I worked for as a 2nd AC had decided they'd had enough of shooting 35mm and wanted to try the Red instead. They had a shoot lined up on the Red and asked whether I would be interested in helping, which gave me even more experience with the Red in a short space of time.

Over that year and into the next I went from being an average (not great) 35mm AC to an in-demand AC/technician working with digital cameras. Ironically, this gave me more experience on larger 35mm shoots, where I became a little better, because digital cameras (by then not just the Red) were being used for certain shots. I distinctly remember the laughter (mine included) when a director wanted to use his newly bought Canon 5D Mark II for a shot because it could record video (locked to 30fps at that time). Little did anyone know.

Then something unexpected happened. A union strike in America had the unintended result of nearly all TV productions switching to digital overnight. This would change the way films were made forever. Nobody expected change to happen so quickly, least of all Kodak and feature DPs. Over a very short period of just two or three years, 95% of productions had switched to shooting digitally, which is remarkable given that 35mm had accounted for 95% of cinema for 100 years. 35mm productions would 'step down' and S16/HDCAM productions would 'step up'. A perfect storm had happened.

Steven Soderbergh, early adopter.

Unsurprisingly, there was complete chaos. Producers, some cinematographers and ACs, and especially post-production companies, struggled to make headway in this new digital world. The 35mm workflow was essentially universal, but the digital workflow changed with every production, and that uncertainty confused everyone.

This is where part of the confusion about what a DIT does comes from.

In those days a DIT was generally a camera technician (mostly because ACs were more used to film or ENG-style video cameras) and, importantly, the person who downloaded the data. ACs are smart people, and they very quickly came to operate the camera menus and set-ups themselves, leaving only the copying of data cards and mags to the DIT. Backed-up data was sent off to large post houses and dealt with much as film sent to a lab would be. Not all post houses had the same skills, however, which caused issues that will be discussed later.

The first DIT confusion for producers and production managers was that certain DPs expected the DIT to understand how the camera worked at sensor level and to advise on ASA settings, colour temperature, exposure and so on, things those DPs did not need to think about too much themselves because they had so many other things to do. Other DPs or ACs were more technically aware of how cameras operated, so in those cases a DIT's knowledge of the finer technicalities was less needed. The line between needing a data wrangler and needing a DIT was blurred, but for the most part 'DIT' remained a catch-all term.

Classic early DIT set-up

As downloading data very quickly changed from a dark art to something 'pretty much anyone with a laptop can do', the DIT role became a growing cause for concern. Producers did not have time to spend hours working out what a DIT really did; as with every other crew position, they relied on CVs or word of mouth to judge someone's suitability. By this time everything had turned a bit 'wild west'. Runners or trainees were hired as DITs on some productions. This would be fine on one job but cause all sorts of problems on others where more technical knowledge was expected. Soon plenty of horror stories appeared: cameras not working (in many cases down to user error), rushes going missing, or DITs thinking their job was rather more important than it was. But as operations quickly became simpler and clearer, cameras easier to use and workflows more standardised, productions inevitably (and rightly) started to employ less experienced DITs, generally with good results.

This is where post houses come in, and where the DIT was reborn.

The role develops

It's a myth that shooting film resulted in a perfect floor-to-post workflow. Ever since telecine became popular, dailies had always been interpreted by operators. Speaking personally, as a 2nd AC I recall a DP having stern words with me after I forgot to write 'Night/Int' on a particular shot: the TK operator had interpreted the scene as 'Day/Int' and graded it accordingly. Most instructions were written on neg report sheets but were very vague: 'Good, strong blacks', 'Make warm', 'Cool morning' and so on. Discussions were had and tests shot beforehand, and because telecine operators were very experienced and DPs tended to shoot 'in camera', results were usually consistently good. On features, most DPs were used to shooting pre-DI, where they pretty much got the intended look on the day.

Initially many of the film labs (like Kodak) did not want to enter the 'video' market, so pre-existing boutique or general facilities and new operations dealt with the data from digital cameras, with very inconsistent results. Like the DIT wild west, there was a post wild west. Some of the top-end houses produced excellent results, and so did some of the brand-new operations, but many produced very poor results, and those poor results often led people to question the DP's skills rather than the post house's shortcomings. What was so frustrating was that very early on Red had clearly seen the future and (initially via Assimilate) had implemented some very clever methods for maintaining a consistent look from the set to the edit suite. Unfortunately this look information was not always passed on successfully, and ironically one of the main reasons for this confusion was Red's forward thinking. In thinking so far ahead, they had stubbornly pushed their own custom workflow, which was not compatible with what existed in most production houses. This would be their Achilles heel, and it is the main reason people still associate Red with a difficult workflow. To make the camera cheap for the end user, they had licensed many off-the-shelf components, and it became clear that this was actually a unique new way to shoot cinema. The Red was a camera that was 50% on-set cinematography and 50% post cinematography. That is where the next revolution in cinematography would be, and where it is now.

The original Redcine (before X), which was pretty much Scratch!

What Red had done was produce a camera that shot raw data, i.e. images that would be finalised after shooting. In real terms this meant that, unlike film, which can only be developed once, Red raw data can be developed again and again, even years later when the algorithms for doing so have improved. But doing this required a MASSIVE amount of processing power. A standard computer from 2007 could take weeks to process this data into usable images. As a result, only large companies with expensive computers could turn dailies around quickly enough to please customers, so for a while Red processing existed only in the realm of large post houses. The Red metadata sent with the footage was often ignored and, to keep later post costs down, the raw data was transcoded to DPX files (some linear, some log) for editing and grading. In many cases the power of raw was lost and results were very variable.

As mentioned, Red licensed some technology to help keep costs down. One product in this area was the Red Rocket, an accelerator card that sped up raw processing. It was very expensive, and it also meant that for years Red was tied to using it alone, long after computer processing had caught up (they opened up GPU processing a couple of years ago). But what it did do was allow the processing of rushes to move out of post houses and onto set. This would a) give DITs a new lease of life and, more importantly, b) advance cinematography by giving control back to the DP, who could now approve rushes before they were sent to editorial.

Obviously this did not go down too well with post houses, as they were losing a good chunk of income. For a while there was a mix: on some productions DITs backed up and transcoded rushes, synced sound and delivered dailies; others continued as before, in some cases sending all rushes off to a post house. Both outcomes were good. DITs had reinvented themselves, and post houses produced better-quality dailies. Instead of laptops for downloading Red CF cards, 12-core Mac Pro towers were needed. But the lab had arrived on set for the first time, and that brought a new set of creative possibilities.

Arrival of new systems

Around then the Alexa arrived, and the Red was suddenly old hat. Most cinematographers knew Arri, especially in Europe, through its range of film cameras. The Alexa was well built, the menus and buttons were in the right places (being German), its specs were way ahead of the Red (pre-MX) and, more importantly, it had been designed to fit into existing workflows. Almost. Initially it recorded only log-encoded ProRes (raw came later).

Nearly everyone could edit straight away with the files it created (the 422 HQ flavour at least, 4444 being trickier), except that everything looked washed out. That was because the camera encoded the linear sensor data as LogC. Log encoding had been developed for DI work, so it was known only within a small field. To view the images as they had been seen on set, a LUT (lookup table) was required in post, and a new set of terminology, along with Rec709, was suddenly introduced into wider cinematography. Now everyone knows what LUTs are; then they were a mystery. Very little software could work with them, usually only very high-end finishing packages like Iridas and Baselight. For the first few months very convoluted methods were used to apply a LUT to dailies: importing into Final Cut, applying the Nick Shaw LUT, exporting as ProRes again, importing into Avid, then exporting as DNxHD 36, and so on, alongside tools such as Glue Tools and AlexICC.
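To see why ungraded LogC looks washed out and what a viewing LUT does, here is a minimal sketch that decodes LogC to scene-linear light and re-encodes it with the plain Rec709 transfer function. It assumes ARRI's published LogC (v3) constants for EI 800 (other EI settings use different values), and it deliberately leaves out the highlight roll-off and colour handling of ARRI's own Rec709 LUTs, so it illustrates the principle rather than replacing them.

```python
import math

# ARRI LogC (v3) constants for EI 800 (assumed here; other EI ratings differ).
CUT, A, B, C, D, E, F = 0.010591, 5.555556, 0.052272, 0.247190, 0.385537, 5.367655, 0.092809


def logc_to_linear(t: float) -> float:
    """Decode a normalised LogC code value (0-1) to scene-linear light."""
    if t > E * CUT + F:
        return (10 ** ((t - D) / C) - B) / A
    return (t - F) / E


def linear_to_logc(x: float) -> float:
    """Encode scene-linear light as a normalised LogC code value."""
    if x > CUT:
        return C * math.log10(A * x + B) + D
    return E * x + F


def rec709_oetf(x: float) -> float:
    """ITU-R BT.709 transfer function, with input clipped to 0-1."""
    x = min(max(x, 0.0), 1.0)
    if x < 0.018:
        return 4.5 * x
    return 1.099 * x ** 0.45 - 0.099


def view_logc(t: float) -> float:
    """A naive viewing transform: LogC in, Rec709 out, no tone mapping."""
    return rec709_oetf(logc_to_linear(t))


grey = linear_to_logc(0.18)   # 18% grey lands at about 0.39 in LogC
print(round(grey, 3), round(view_logc(grey), 3))
```

One reason the uncorrected picture looks flat is visible directly in `linear_to_logc(0.0)`: LogC places scene black at about 0.093 rather than 0, so blacks sit well above a Rec709 display's black.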

Kodak Cineon Log Files for DI

The Great Progression

Original DaVinci 2K Plus

A year later one of the greatest progressions in cinematography arrived (again). DaVinci made very high-end finishing hardware and software for 35mm productions. As the film world (labs and Kodak/Fuji) had been decimated by digital, DaVinci was essentially about to go bankrupt. A small Australian 'converter box' company, Blackmagic Design, bought it and then released Resolve as software for £1000, to be run on an Apple Mac! For the first time this gave everyone access to a proper super-high-end grading package. It was way beyond the limitations of Apple Color and incredibly exciting. As well as giving independent filmmakers shooting on 5Ds and other DSLRs access to serious post tools, it allowed DITs to significantly improve the quality (and ease) of making daily deliverables. Everyone was happy.

DaVinci Resolve on a Laptop!

In a very short space of time Blackmagic would release a free version of DaVinci Resolve and Apple would release the Retina MacBook Pro. This combination made it possible, once again, for pretty much anyone to back up and create dailies for commercials and medium-budget features on a cheap laptop. Technology had again expanded the possibilities of cinematography and put them into the hands of all.

Very large features were slightly different. The big post houses or the very smart indie operations had created very powerful data labs. Essentially they went back to the concept of overnight dailies: at the end of a shooting day the original camera cards (or, in some cases, drives, when a copy was made on set) were sent away, and dailies were created and returned the next day. Unlike at the beginning of the digital revolution, there was an important difference. The DIT had a very different role, one much closer to the role's real origins as an on-set video/broadcast engineer. They would sit next to the DP and manipulate the sensor signal; they would not back up cards or transcode rushes (though this sometimes happened, especially as drives and ports have become so fast). The technology to do this was incredibly expensive, so expensive in fact that only the very largest Hollywood features could afford to work this way. To all intents and purposes they were 'live grading'. The equipment would be expensive LUT boxes from Truelight/FilmLight/Baselight or Pluto, and very expensive software from Colorfront or Iridas Onset. It's certainly nothing new: 3CP by Gamma Density had developed their system years ago, using stills-camera images to pass colour information to telecine operators on 35mm shoots (http://www.eetimes.com/document.asp?doc_id=1300609).

Livegrade For All

As with everything in digital cinema equipment, progress has made prices significantly lower and put the technology within everyone's reach. The latest technology has allowed the DIT role to develop even further by giving DPs significant control over their images, namely through cheap access to live grading. Blackmagic were, unsurprisingly, involved, but not directly. In 2012 a German company called Pomfort hacked a very cheap Blackmagic conversion box, the HDLink.

They created software called Livegrade (surprisingly, nobody had used the name before). All of this could be used with any MacBook for around £500. Its genius was to bypass capture devices entirely and rely on simple, existing technology.

What does a current DIT set-up look like?

As we are discussing current DIT and cinematography equipment and conventions, it makes sense to explain one current working practice with this set-up in a little more depth.

As the Alexa is by far the most used digital camera on features and commercials, it makes sense to use it as an example.

Firstly, the kit and set-up, in simplified form:

Set-ups without Livegrade use an HD-SDI output from the Alexa straight to the monitor. This shows either a standard Rec709 signal or an Alexa Look File (still in Rec709). If you change the Alexa's gamma setting to LogC, the monitor displays the LogC image: great for displaying the recorded signal, not so good for anyone else.

There are various options for applying a LUT to the monitor. For our purposes we will use a Blackmagic HDLink Pro, which costs around £300. It fits into the chain between camera and monitor: Camera > HDLink > Monitor.

The HDLink is not a capture device; all it does is alter the signal between the camera output and the monitor input. To manipulate the image you need to connect it to a computer, and any MacBook will do. The free software allows you to load a single LUT. The box connects to your laptop over USB 2 (very little processing is involved), which lets you change the values applied to the HD-SDI signal passing through it.

The free software is clunky and slow, and not really usable for anything other than burning a LUT into the box.
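Conceptually, a LUT box in the Camera > HDLink > Monitor chain just looks each incoming value up in a table and passes the result on to the monitor. The sketch below shows that idea with a 1D LUT and linear interpolation between entries. It is a simplification: boxes like this commonly load 3D LUTs, which can also mix colour channels, and the squared curve used to build the example table is arbitrary.

```python
from typing import Callable, List, Sequence, Tuple

Pixel = Tuple[float, float, float]


def build_1d_lut(transfer: Callable[[float], float], size: int = 1024) -> List[float]:
    """Sample a transfer curve at `size` evenly spaced input values in 0-1."""
    return [transfer(i / (size - 1)) for i in range(size)]


def apply_1d_lut(lut: Sequence[float], value: float) -> float:
    """Look up one normalised value, interpolating linearly between table entries."""
    value = min(max(value, 0.0), 1.0)
    pos = value * (len(lut) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(lut) - 1)
    frac = pos - lo
    return lut[lo] + (lut[hi] - lut[lo]) * frac


def process_frame(lut: Sequence[float], frame: List[List[Pixel]]) -> List[List[Pixel]]:
    """Apply the same table to every channel of every pixel, returning a new frame."""
    return [[tuple(apply_1d_lut(lut, c) for c in px) for px in row] for row in frame]


# Example: a simple power curve baked into a 5-entry table.
lut = build_1d_lut(lambda x: x ** 2, size=5)
frame = [[(0.5, 0.625, 1.0)]]
print(process_frame(lut, frame))   # [[(0.25, 0.40625, 1.0)]]
```

The input 0.625 falls halfway between two table entries, so its output is interpolated; a real box uses far larger tables, which keeps that interpolation error small.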

To manipulate the signal live you need software such as Livegrade; the current version is Livegrade Pro 3. You can connect a grading panel to the laptop (again over USB 2). I use a Tangent Wave, though it's a bit big.

You can now adjust Lift, Gamma, Gain, Saturation and Curves non-destructively. Grading from log is a massive leap forward from working with Rec709, and doing it live greatly increases the options available to a cinematographer. It is a new tool. But a live grading system offers far more creative possibilities, ones that were difficult to achieve in the past.

One of the greatest benefits is an option that was very common with film but, until recently, less so with digital (for various reasons, one being on-set monitoring). In a Livegrade system the DIT/DP will always have a remote iris control.

Combined with Livegrade, this gives you the ability to 'print up' or 'print down'. In practice this means you can control where 18% grey lies on your curve, which opens up new possibilities for skin tones. To illustrate, imagine your skin tones sit at 30% on the log signal: you can open the physical iris 0.9 stops, to roughly 40%, and then non-destructively stop back down in Livegrade.

Your LUTted image will look pretty much the same, but you have changed where your skin tones lie on the recorded log signal. This is valuable for several reasons: for creativity (skin tones do look different at different points on the curve), for dealing with noise in the shadows or, working the other way, for adding texture.
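The print up/print down trick works because an exposure change is just a multiplication in scene-linear light, so an iris change can be undone exactly in the grade. This minimal sketch again assumes the published ARRI LogC (v3) EI 800 curve, and the skin value is hypothetical. The exact code values, and how they read as percentages on a waveform, depend on the log curve and the scope's scaling, so the sketch demonstrates the principle rather than reproducing the 30%/40% figures.

```python
import math

# ARRI LogC (v3) EI 800 constants (assumed; other EI ratings differ).
CUT, A, B, C, D, E, F = 0.010591, 5.555556, 0.052272, 0.247190, 0.385537, 5.367655, 0.092809


def to_logc(x: float) -> float:
    return C * math.log10(A * x + B) + D if x > CUT else E * x + F


def from_logc(t: float) -> float:
    return (10 ** ((t - D) / C) - B) / A if t > E * CUT + F else (t - F) / E


def print_down(logc_value: float, stops: float) -> float:
    """Non-destructively remove `stops` of exposure from a recorded LogC value
    by scaling in linear light (by 2 ** -stops) and re-encoding."""
    return to_logc(from_logc(logc_value) * 2 ** -stops)


skin_linear = 0.07   # hypothetical scene-linear skin value
iris_open = 0.9      # stops opened on the lens

recorded_normal = to_logc(skin_linear)
recorded_open = to_logc(skin_linear * 2 ** iris_open)   # skin now sits higher on the log curve
displayed = print_down(recorded_open, iris_open)        # value fed to the monitor LUT

print(f"recorded: {recorded_normal:.3f} -> {recorded_open:.3f}, displayed: {displayed:.3f}")
```

Only the recorded signal changes; the value reaching the monitor LUT, and therefore the picture, is the same as before the iris was opened.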

Not a great example to be fair. Sorry!

With Lift, Gamma and Gain you have greater flexibility over colour. Rather than a tint/white balance adjustment, which changes the overall image, you can change parts of a scene: highlights can have warmth added or taken away while the shadows remain the same.
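There is no single standard definition of lift, gamma and gain, and grading systems implement them differently. The sketch below uses one common textbook formulation, assumed here for illustration, applied per channel to values in 0-1. It shows why warming the highlights with gain leaves the blacks alone, unlike a white balance shift that scales the whole image.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def lift_gamma_gain(x: float, lift: float = 0.0, gamma: float = 1.0, gain: float = 1.0) -> float:
    """One common formulation: lift sets where black lands, gain sets where
    white lands, and gamma bends the midtones in between."""
    y = lift + x * (gain - lift)
    y = max(y, 0.0)               # avoid raising a negative number to a fractional power
    return y ** (1.0 / gamma)


def grade_rgb(rgb: RGB, lift: RGB = (0.0, 0.0, 0.0), gamma: RGB = (1.0, 1.0, 1.0),
              gain: RGB = (1.0, 1.0, 1.0)) -> RGB:
    """Apply lift/gamma/gain separately to the R, G and B channels."""
    return tuple(lift_gamma_gain(c, l, g, k) for c, l, g, k in zip(rgb, lift, gamma, gain))


# Warm the highlights only: red gain up, blue gain down, lift untouched.
warm_gain = (1.05, 1.0, 0.95)
print(grade_rgb((0.0, 0.0, 0.0), gain=warm_gain))   # shadows unchanged: (0.0, 0.0, 0.0)
print(grade_rgb((0.9, 0.9, 0.9), gain=warm_gain))   # highlights pushed towards red
```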

Early versions of Livegrade offered little more than this flexibility, plus the ability to save and load LUTs for various post packages. But there was little metadata other than CDLs.
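A CDL carries this kind of primary grade as ten numbers: slope, offset and power per channel, plus one saturation value. The per-channel formula and the Rec.709 luma weights used for saturation follow the ASC CDL definition. This sketch clamps the slope/offset result to 0-1 before the power function; implementations differ on clamping, particularly for log or extended-range images, so treat that detail as an assumption.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def apply_cdl(rgb: RGB, slope: RGB = (1.0, 1.0, 1.0), offset: RGB = (0.0, 0.0, 0.0),
              power: RGB = (1.0, 1.0, 1.0), sat: float = 1.0) -> RGB:
    """ASC CDL: out = clamp(in * slope + offset) ** power per channel, then saturation."""
    sop = []
    for c, s, o, p in zip(rgb, slope, offset, power):
        v = min(max(c * s + o, 0.0), 1.0)   # clamp before the power function (assumed behaviour)
        sop.append(v ** p)
    # Saturation is applied around Rec.709 luma.
    luma = 0.2126 * sop[0] + 0.7152 * sop[1] + 0.0722 * sop[2]
    return tuple(luma + sat * (v - luma) for v in sop)


# A hypothetical warm look expressed as CDL values.
warm_look = dict(slope=(1.08, 1.0, 0.92), offset=(0.0, 0.0, -0.01), power=(1.0, 1.0, 1.05), sat=0.9)
print(apply_cdl((0.4, 0.4, 0.4), **warm_look))
```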

Livegrade Pro allows a capture card to be used, which has two advantages. The first is that on-set adjustments are referenced to timecode, which means other software, specifically Silverstack XT, can read them. That gives you a much stronger guarantee that what you see on set will be seen in the colourist's suite.
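The value of timecode here is that a look can be tied to the exact frames it was set for. The sketch below shows the general idea with a hypothetical list of (timecode, look name) events and non-drop-frame timecode at 25fps. It is not how Livegrade or Silverstack actually store or exchange this metadata; the event format and look names are invented for illustration.

```python
from typing import List, Optional, Tuple


def tc_to_frames(tc: str, fps: int = 25) -> int:
    """Convert non-drop-frame SMPTE timecode 'HH:MM:SS:FF' to a frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def look_for_clip(events: List[Tuple[str, str]], clip_start: str, fps: int = 25) -> Optional[str]:
    """Return the most recent look saved at or before the clip's start timecode.

    `events` is a hypothetical list of (timecode, look name) pairs."""
    start = tc_to_frames(clip_start, fps)
    current = None
    for tc, look in sorted(events, key=lambda e: tc_to_frames(e[0], fps)):
        if tc_to_frames(tc, fps) > start:
            break
        current = look
    return current


events = [("10:00:00:00", "day4_int_warm"), ("10:15:30:12", "day4_int_warm_v2")]
print(look_for_clip(events, "10:20:00:00"))   # day4_int_warm_v2
```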

The capture card also allows you to compare a previous take with the current one. It's difficult to overstate how big a deal this is: the look of a whole film can be built up this way. To illustrate, think of the film colour palettes that appear on various film blogs (the colour schemes used by particular films, e.g. http://www.digitaltrends.com/photography/cinema-palettes-twitter-account-color/). This greater control can be used to achieve such palettes and to keep skin tones consistent throughout. On shoot day 23 you can immediately bring up a scene from day 4 that cuts directly with it and wipe it across your live screen. Or you can match shots from the same day: two or three cameras, mismatched IRNDs (different filters always introduce different casts), or changing weather. Combined with your iris control, you have a serious amount of creativity at your fingertips, all seen live.
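A wipe is simple to describe in code: every row takes its left-hand pixels from the reference frame and the rest from the live feed. This sketch uses plain nested lists as stand-in frames; that frame representation, and the placeholder "R"/"L" pixels, are assumptions for illustration only.

```python
from typing import List, Sequence, TypeVar

P = TypeVar("P")


def wipe(reference: Sequence[Sequence[P]], live: Sequence[Sequence[P]], position: float) -> List[List[P]]:
    """Split-screen wipe: columns left of `position` (0-1) come from the
    reference frame (e.g. a still from an earlier shoot day), the rest from
    the live camera feed. Both frames must be the same size."""
    if len(reference) != len(live) or any(len(r) != len(l) for r, l in zip(reference, live)):
        raise ValueError("reference and live frames must be the same size")
    position = min(max(position, 0.0), 1.0)
    out = []
    for ref_row, live_row in zip(reference, live):
        split = round(position * len(ref_row))
        out.append(list(ref_row[:split]) + list(live_row[split:]))
    return out


day4_still = [["R"] * 4, ["R"] * 4]
live_feed = [["L"] * 4, ["L"] * 4]
print(wipe(day4_still, live_feed, 0.5))   # [['R', 'R', 'L', 'L'], ['R', 'R', 'L', 'L']]
```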

All of this can then be put into a single document for reference in post, along with a CDL and a 3D LUT. It's staggering how much control the DP has lost to post, and options for taking back this control are essential. So many people shoot and leave it to the grade, but that is no longer enough. By the time your footage has gone to the cutting room, or even to dailies, people will forget your intended look if it is not stamped in, and by then it is very difficult to convince them to return to it. Remember the early days of LogC? That is why so many commercials had that washed-out look: directors and producers could not see it any other way after the cut.
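As an illustration of what ends up in that document, the sketch below serialises a look in two common interchange forms: a 3D LUT in the widely used .cube text layout (a LUT_3D_SIZE header followed by one RGB row per grid point, red changing fastest) and a single ASC CDL correction as .cc-style XML. The functions return text rather than writing files, the look values are hypothetical, and the XML details a particular grading tool accepts (namespace, element spelling) should be checked against that tool.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Tuple

RGB = Tuple[float, float, float]


def cube_text(transform: Callable[[RGB], RGB], size: int = 17, title: str = "onset look") -> str:
    """Sample `transform` on a size x size x size grid and return .cube text
    (red index changes fastest, then green, then blue)."""
    if size < 2:
        raise ValueError("a 3D LUT needs at least 2 points per axis")
    lines = [f'TITLE "{title}"', f"LUT_3D_SIZE {size}"]
    step = size - 1
    for b in range(size):
        for g in range(size):
            for r in range(size):
                out = transform((r / step, g / step, b / step))
                lines.append(" ".join(f"{v:.6f}" for v in out))
    return "\n".join(lines) + "\n"


def cc_text(cc_id: str, slope: RGB, offset: RGB, power: RGB, sat: float) -> str:
    """Return one ASC CDL ColorCorrection element as XML text."""
    root = ET.Element("ColorCorrection", {"id": cc_id, "xmlns": "urn:ASC:CDL:v1.01"})
    sop = ET.SubElement(root, "SOPNode")
    for tag, values in (("Slope", slope), ("Offset", offset), ("Power", power)):
        ET.SubElement(sop, tag).text = " ".join(f"{v:.6f}" for v in values)
    sat_node = ET.SubElement(root, "SatNode")
    ET.SubElement(sat_node, "Saturation").text = f"{sat:.6f}"
    return ET.tostring(root, encoding="unicode")


def identity(rgb: RGB) -> RGB:
    """Hypothetical stand-in for a real look transform."""
    return rgb


print(cube_text(identity, size=2))
print(cc_text("A001C003", (1.08, 1.0, 0.92), (0.0, 0.0, -0.01), (1.0, 1.0, 1.05), 0.9))
```

Writing either string to disk is a single `open(path, "w").write(...)`; keeping serialisation separate from file handling makes the output easy to inspect or send elsewhere.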

How does a DIT facilitate this system for a DP?

Unsurprisingly, every production requires a different approach. The reasons are usually budget, location logistics and the camera system used, but principally fitting in with the DP's method of working. At its heart, a DIT is just another tool to help a DP, and like other tools they are used in very different ways to achieve the most suitable photography for the story being told. Because of this, as well as understanding the technical aspects of the job, a DIT has to understand the politics, and in many instances this is far more difficult. Every DP has a different way of working and a different personality. Previous working relationships help enormously, especially with new technology and the different approaches it brings, but you have to adapt very quickly and establish boundaries. It is imperative to understand that you are being hired to assist the DP. Some DPs like feedback, suggestions and initiative, while others prefer you to do as you are told. It is important to be impartial, to work within the boundaries presented and to understand that neither approach is better than the other. With experience it quickly becomes apparent how to approach a given production.

In general, on a production using Livegrade, as on most other productions, the DP will light to a certain T-stop, often around T2.8/4 at 800 ASA. This can change throughout the shoot, especially for low-light scenes and depending on the lenses used.

Sometimes a DP will be with you for the entire shoot, sometimes they will spend part of their time with the director, and sometimes they will operate and spend very little time with you. Responsibilities and expectations change depending on how they wish to work.

A simple one-camera set-up. A DIT blackout tent would be used in most cases (but it gets very dark in there for photos).

For DITs not used to working with Livegrade, one surprising difference is that you will mostly be in control of exposure. You will be in charge of a remote iris for each camera, and it is your duty to set and maintain the T-stop. This is fairly simple if the DP is lighting to a defined stop with the gaffer in a studio. Your duty is to give the DP a good negative, so a waveform monitor is essential, as is a properly calibrated Grade 1 monitor. Some DPs, when they are not at your monitor, will let you liaise with the lighting department, usually the gaffer, who will often be at the monitor with you. You can work with them to ensure highlights are not burning out and to fill any areas of the image that have no light on them. Unlike on film, black areas carry no information and let noise creep in. A simple fill can be 'graded down' with a curve so the image appears black but still records code values. Quite often you will print down with Livegrade to maintain a thicker negative if the stop allows.
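A waveform monitor is the proper tool for this, but the two checks described above (highlights burning out, and areas with so little light that noise creeps in) can be expressed as a simple count. This minimal sketch works on normalised RGB values with Rec.709 luma weights; both threshold defaults are hypothetical and would need setting for the camera, log curve and scope scaling actually in use.

```python
from typing import Dict, Iterable, Tuple

RGB = Tuple[float, float, float]


def luma709(rgb: RGB) -> float:
    """Rec.709 luma from normalised R, G, B."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def exposure_report(frame: Iterable[Iterable[RGB]], clip_level: float = 0.95,
                    floor_level: float = 0.10) -> Dict[str, float]:
    """Percentage of pixels at risk of burning out (luma >= clip_level) and of
    pixels with almost no signal (luma <= floor_level). Thresholds are hypothetical."""
    total = clipped = starved = 0
    for row in frame:
        for px in row:
            y = luma709(px)
            total += 1
            if y >= clip_level:
                clipped += 1
            elif y <= floor_level:
                starved += 1
    if total == 0:
        raise ValueError("empty frame")
    return {"clipped_pct": 100.0 * clipped / total, "starved_pct": 100.0 * starved / total}


frame = [[(1.0, 1.0, 1.0), (0.4, 0.4, 0.4)],
         [(0.02, 0.02, 0.02), (0.4, 0.4, 0.4)]]
print(exposure_report(frame))   # {'clipped_pct': 25.0, 'starved_pct': 25.0}
```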

Some DPs prefer to deal with this themselves, but passing these small tweaks on to a DIT does not mean losing any creative control; it just frees them to concentrate on other aspects of the photography.

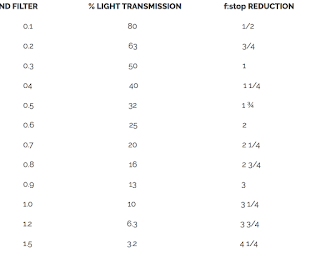

In most cases exposure filters are also handed over to the DIT. At this point it's worth mentioning that you will be in radio contact with the camera assistants, passing on instructions from the DP, or exposure/colour/menu instructions of your own. Mostly these conversations will be about adding or removing ND filters, and it's vital that you understand very quickly how these affect the image. If shooting outside you still want to maintain a consistent shooting stop, so if you are shooting at around T2.8/4 you will need to ND up to achieve this. Again, you have Livegrade to print up or down with. It becomes trickier with constantly changing light, and trickier still when different lenses are being used.
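The arithmetic behind 'ND-ing up' is worth having at your fingertips: optical density is log10 of the attenuation, so roughly every 0.3 of ND is one stop, and each full T-stop is a factor of the square root of two in T-number. This sketch assumes filters come in 0.3 steps and treats 0.3 as exactly one stop, the usual on-set approximation.

```python
import math


def nd_stops(density: float) -> float:
    """Stops of light cut by an ND filter (0.3 density is about one stop)."""
    return density / 0.3


def nd_for_stop(metered_t: float, shooting_t: float) -> float:
    """ND density needed to hold `shooting_t` when the meter calls for `metered_t`.

    T-numbers scale by sqrt(2) per stop, so stops = 2 * log2(ratio).
    The result is rounded to the nearest 0.3 step; 0.0 means no ND is needed."""
    stops = 2 * math.log2(metered_t / shooting_t)
    return max(round(stops), 0) * 0.3


print(round(nd_stops(1.8), 2))          # 6.0 stops
print(round(nd_for_stop(16, 2.8), 1))   # 1.5: five stops of ND to stay at T2.8
```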

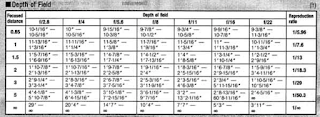

You have to be aware of the size and type of lens being used in certain set-ups. If A-camera is shooting on a 25mm lens at T2.8 with ND 1.8, it's not appropriate for B-camera, shooting 48fps on a 300mm lens, to be at the same stop. You will have to change its ND and stop to give the focus puller a chance.

Also, if one camera is using a brand-new Leica lens and another a 20-year-old zoom, it's probably not appropriate to have both wide open, as the old lens will not perform at its best. This can become hectic, so detailed notes have to be taken, otherwise you will end up with a very sore head. To give you an even bigger headache, some cameras have different base ASA ratings, so the calculations needed to match multiple cameras can be very tricky (ASA, stop, shutter angle, frame rate, ND, lens size and lens type all need to be taken into consideration). Other than for artistic reasons, there is no need to burden a DP with these, as a DIT can handle them, in much the same way that a DP does not have to pull focus, operate a camera, move a light, mark a clapperboard or write negative report sheets.
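The multi-camera bookkeeping can be reduced to one number per camera: relative exposure in stops. This is a minimal sketch, assuming 0.3 ND per stop and relying on the T-stop to account for lens transmission. Focal length does not appear because it changes depth of field, not exposure, and lens type is a judgement about image quality rather than arithmetic. The example follows the A/B-camera situation above, with an assumed T5.6 for the 300mm.

```python
import math


def exposure_stops(iso: float, t_stop: float, shutter_angle: float, fps: float, nd: float) -> float:
    """Relative exposure in stops (higher = brighter). Only differences
    between cameras are meaningful."""
    shutter_time = (shutter_angle / 360.0) / fps   # seconds of exposure per frame
    return (math.log2(iso)
            - 2 * math.log2(t_stop)                # one stop per factor of sqrt(2) in T-number
            + math.log2(shutter_time)
            - nd / 0.3)                            # about 0.3 ND density per stop


def nd_to_match(reference: float, iso: float, t_stop: float, shutter_angle: float, fps: float) -> float:
    """ND density that brings a camera to the same exposure as `reference`."""
    unfiltered = exposure_stops(iso, t_stop, shutter_angle, fps, nd=0.0)
    return (unfiltered - reference) * 0.3


# A-camera: 800 ASA, 25mm at T2.8, 180 degree shutter, 24fps, ND 1.8.
a_cam = exposure_stops(800, 2.8, 180, 24, 1.8)
# B-camera: 800 ASA, 300mm at 48fps, stopped down to T5.6 (assumed) for the focus puller.
b_nd = nd_to_match(a_cam, 800, 5.6, 180, 48)
print(round(b_nd, 1))   # 0.9
```

Closing two stops and doubling the frame rate costs three stops in total, so B-camera needs three stops less ND than A-camera: ND 0.9 instead of 1.8.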

USE APPS LIKE P-CAM!

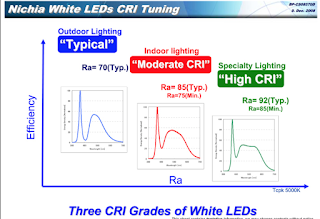

Colour theory is important to understand. Some DPs keep things simple; others have very elaborate ways of achieving different skin tones. One DP recently wanted to eliminate magenta from the spectrum because he thought it looked too 'video-ish'. To achieve this he placed colour filters on the camera, with the opposite filters on the lights. This created a very green image, which he would then time back to a 'normal' image in Livegrade. The image before the colour filters and the end result looked very similar, but on close inspection on a vectorscope you could see that magenta had mostly disappeared, and it did look subtly nicer. It was an elaborate process involving complicated stop and white balance calculations, but the end result looked fantastic. Achieving this without Livegrade, and without the ability to bring up previous shots to grade against (essentially maintaining a consistent skin tone throughout the film), would be very difficult, if not impossible, without producers questioning the dailies.
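On a vectorscope, complementary colours sit directly opposite each other, which is why a filter and its opposite cancel out. This sketch computes Rec.709 colour-difference coordinates (Cb, Cr) and the resulting hue angle for an RGB value, using the standard BT.709 luma weights and scaling; the choice of pure magenta and green as examples is only illustrative.

```python
import math
from typing import Tuple


def vectorscope_point(r: float, g: float, b: float) -> Tuple[float, float]:
    """Rec.709 colour-difference coordinates: where an RGB value lands on a vectorscope."""
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    cb = (b - y) / 1.8556
    cr = (r - y) / 1.5748
    return cb, cr


def hue_angle(r: float, g: float, b: float) -> float:
    """Angle of the (Cb, Cr) vector in degrees, 0-360."""
    cb, cr = vectorscope_point(r, g, b)
    return math.degrees(math.atan2(cr, cb)) % 360


magenta = (1.0, 0.0, 1.0)
green = (0.0, 1.0, 0.0)
print(round(hue_angle(*magenta) - hue_angle(*green)) % 360)   # 180: opposite sides of the scope
```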

Livegrade has freed up DPs' time and allowed greater flexibility in on-set control and, just as importantly, greater quality control over the rushes sent from set to editorial.

What is next?

Who knows? Already, most cameras are integrating Livegrade-style grading into their onboard software. The process can only get smaller, faster and better. The software can be controlled over Wi-Fi, and Wi-Fi that actually works, unlike some manufacturers' earlier attempts.

ASC CDLs, Livegrade and LUTs should all form part of a cinematographer's toolkit. Control must be given back to the cinematographer, and the 'lab' should return to its own world. DPs don't necessarily need in-depth knowledge of bit rates, codecs or file formats (DITs can handle that for them), but new tools make it possible to maintain control of the image. So much damage has already been done, but pushing for these options on shoots and stamping your authorship on the images is more important now than it has ever been. The irony of cameras with so much latitude and such deep bit depth is that they leave options open for post and producers long after the DP has left the project. The final grade is not always the place to present your original intentions. Often, long before final colouring takes place, others have made decisions based on what they saw in the edit, and this should not continue. The great thing is that this is now starting to change, but only for the DPs who push for it. The equipment is now so inexpensive that in most situations the look can be set during the shoot.

Obviously this article does not cover every aspect of what a DIT does. It's written from a single perspective, and many productions, DITs and DPs work very successfully in different ways. This is good, as cinema is meant to be full of diversity; that's what creates great images.
